Chasing big data? You're going to need a bigger boat

Bandwidth matters as much as algorithms now that the military's wide array of sensors collects huge data sets.

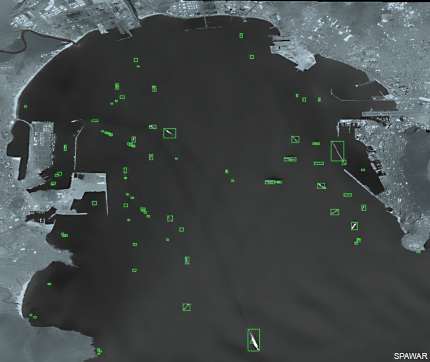

Thousands of sensors are constantly collecting information, which can strain a network.

Devising architectures and writing software to analyze all the information collected by military systems and personnel is a huge challenge, and the hardware issues are just as large. It takes a lot of bandwidth and storage space to get data into systems that can turn it into useful information.

Military and corporate strategists are racing to deploy big data analytics in the field. As these tactics emerge, developers must begin by looking at all the elements that need to be linked.

“There’s data from UAVs, manned vehicles, weapon systems, heads-up displays on helmets and many other sensors,” said Peter Tran, senior director of RSA’s Advanced Cyber Defense Practice.

Most of these sensors are being enhanced to provide better resolution so analysts can glean more information from each image. Moving this data over complex networks that include satellites and wireless networking schemes as well as conventional cabled techniques is a growing challenge.

“One image can be several gigabytes,” said Heidi Buck, head of the Automated Imagery Analysis Group at the Space and Naval Warfare Systems Center, Pacific (SSC-PAC). “You need a huge pipe to handle everything. Video images are getting larger, resolution is going up and frame rates are getting faster.”
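To put a rough number on that "huge pipe," the back-of-the-envelope sketch below estimates how long a single image takes to cross a link. The 3 GB image size and the two link speeds are illustrative assumptions, not figures from SSC-PAC, and the calculation ignores latency, protocol overhead and contention.

```python
def transfer_seconds(size_bytes: float, link_bits_per_second: float,
                     efficiency: float = 1.0) -> float:
    """Estimate the time to move size_bytes over a link.

    efficiency is the usable fraction of the nominal link rate
    (1.0 means the full rate is available). Latency is ignored.
    """
    if link_bits_per_second <= 0:
        raise ValueError("link rate must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_bytes * 8 / (link_bits_per_second * efficiency)


GB = 10**9  # decimal gigabyte

# Hypothetical values chosen only for illustration.
image_bytes = 3 * GB
links = {
    "10 Mbit/s link (assumed)": 10e6,
    "1 Gbit/s link (assumed)": 1e9,
}

for label, rate in links.items():
    seconds = transfer_seconds(image_bytes, rate)
    print(f"{label}: {seconds:,.0f} s ({seconds / 60:.1f} min)")
```

Under these assumptions, one 3 GB image ties up the slower link for about 40 minutes and the faster one for about 24 seconds, which is why higher resolution and faster frame rates translate so directly into bandwidth demand.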

As defense industry planners add more bandwidth, they are also working together to ensure that all the networks are compatible. If various military services use their own architectures and protocols, cooperation between groups may be slowed while data is converted.

“We want to provide interoperability among systems so we can make a repository for data from unmanned aerial and ground vehicles and undersea vehicles,” said Thomas “Lee” Zimmerman, head of the Communication and Networks Department at SSC-PAC. “We want to greatly reduce the number of interfaces needed to share data. This also allows us to aggregate volumes of data in a heterogeneous data storage with imagery and other formats.”

The need to store information is growing, driven in part by big data analysis schemes that can piece together tiny bits of data to detect commonalities that might otherwise be difficult to find. Storage is also required in remote areas where connections may be lost, for example when a major storm blocks the satellite communications that provide the only link.

“Ships can be disconnected or have intermittent contact, so we have to determine whether to have all the data on or off the ship and what we can communicate with satellites,” Zimmerman said. “We’re talking terabytes of data that we have to replicate and synch. It’s a challenge to direct the right data to the right place. Ships don’t have unlimited space for storage and processors.”