On Nov. 3, the #stopthesteal false narrative took just minutes to spike and start to spread. (Zignal Labs)

Misinformation 2020: What the Data Tells Us About Election-Related Falsehoods

Here are urgent lessons from the year’s most-spread false themes — and the ones going viral right now.

We can’t fully understand the 2020 election and its current aftermath without understanding how misinformation flowed — and continues to flow — through the body politic. So what does the hard data tell us about the online information warfare that targeted American voters?

Drawing on Zignal Labs' comprehensive access to diverse digital media data sources, from Twitter to Facebook to Reddit, combined with machine intelligence, we can surface the numbers behind the most pervasive misinformation stories about the 2020 U.S. election. (Because the intent behind these unverified stories is sometimes hard to establish, it is more appropriate to call them "misinformation," though many clearly appear to have been fueled by intentional disinformation campaigns.) The data shows that falsehood was rife, and that it was propelled in different ways than in 2016.

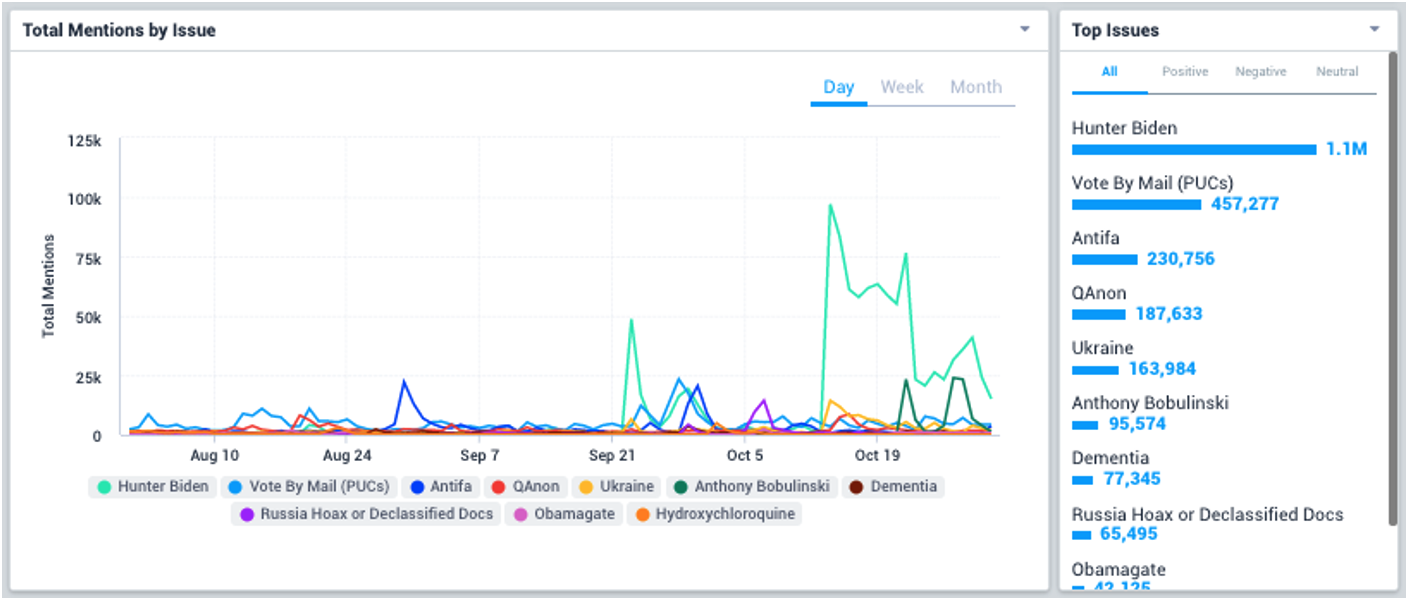

Of all the false stories and conspiracy theories spread from the political convention season this summer to the final weekend of the election, four themes appeared most often:

- One of the most recent was also the most shared: The debunked Hunter Biden narrative (the “laptop” story disavowed by major media) saw 31.3 million mentions across social media.

- False claims of vote-by-mail fraud were shared steadily over the months, for a total of 14 million mentions.

- Inaccurate claims of antifa violence (claims belied by data on violent acts and mass killings in the U.S. overall and during the protests this summer) had 7.2 million mentions.

- The baseless, ever-changing QAnon conspiracy theory received 6.2 million mentions.

Leading misinformation themes about the 2020 U.S. election, Aug. 1 to Oct. 31 (Zignal Labs)

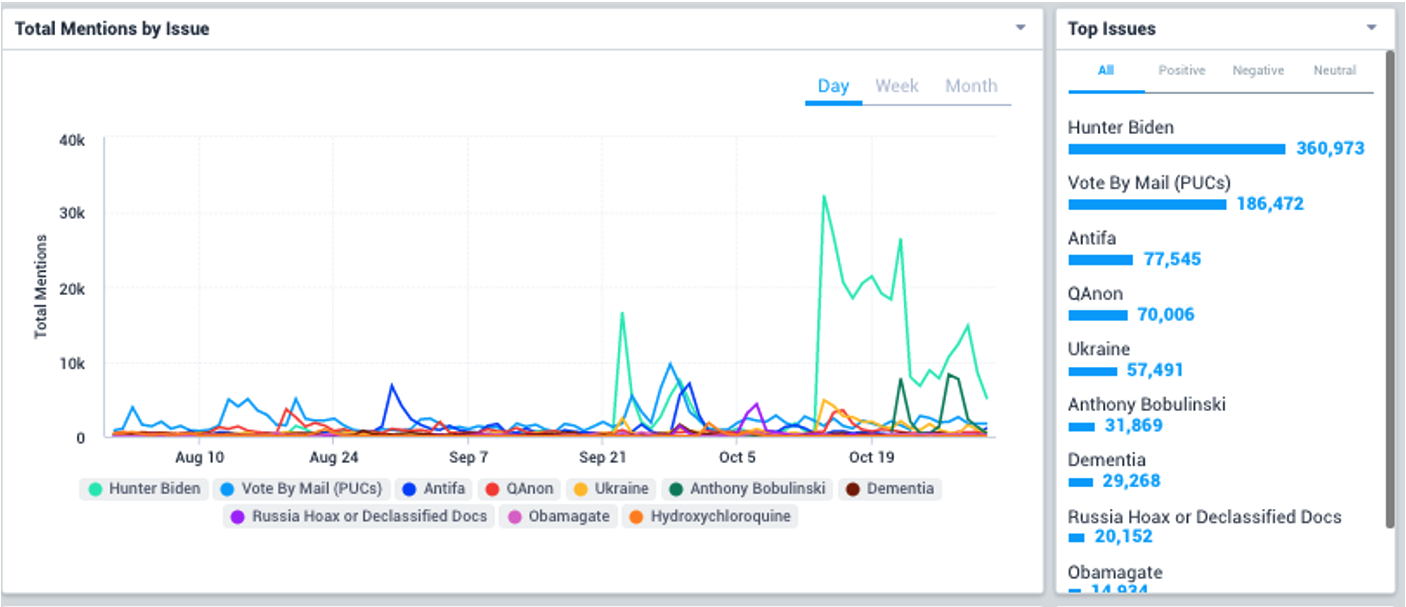

These and other misinformation themes garnered tens of millions of mentions, a significant and pernicious slice of the billion-plus total mentions of election-related themes. These falsehoods reached audiences across the country, but unevenly, with swing states especially targeted. The top three states for mentions of misinformation were the swing states of Pennsylvania, Michigan, and Florida, and the distribution varied even among them: the false Hunter Biden narrative, for example, had almost twice the per capita traction in Florida as in Pennsylvania. It is still far too early to tell, but this consumption of misinformation may help explain why pollsters' estimates about the election have been off.

This parallels other reporting that Spanish speakers clustered in Florida were a focus of an incredibly energetic and organized effort to spread baseless allegations and aid the Trump campaign. “In just 24 hours, Spanish-language disinformation was generating traffic that eclipsed even the interference campaign by the Kremlin-backed Russian Internet Research Agency four years ago,” the New York Times reported Wednesday.

Leading misinformation themes about the 2020 U.S. election discussed within Florida, Aug. 1 to Oct. 31 (Zignal Labs)

Leading misinformation themes about the 2020 U.S. election discussed within Pennsylvania, Aug. 1 to Oct. 31 (Zignal Labs)

This points to a second key finding. The data shows that the companies that run social media platforms are managing toxic discussion trends better this year than in 2016 (not a high bar to clear), but they still lag far behind the scale of the problem. After years of external criticism and internal soul-searching, the companies altered numerous policies and invested far more organizational resources in the battle, and it showed. They undertook a diverse array of interventions, both seen and unseen, into what was allowed on their networks, from taking down Russian and Iranian troll accounts to labeling false statements by U.S. leaders on certain election-related topics. In this effort, the platforms were aided by one of the largest perpetrators of election-related misinformation. Like a movie villain who can't help explaining his plot, Donald Trump had been talking and tweeting for weeks about his plans to push false claims about mail-in voter fraud. Forewarned, Twitter was ready to apply warning labels to false and misleading tweets. The day after the election, the president set a new kind of record by having more than half of his tweets flagged in this way.

Yet for all that effort, social media platforms remain a battlefield on which too much misinformation thrives and those who push it find too little resistance. Twitter, YouTube, and Facebook do not sufficiently coordinate their policies, allowing disinformation dealers to "forum shop" and take advantage of the seams between policies. Newer platforms such as TikTok, meanwhile, have not built up the same anti-misinformation processes and remain relative "Wild West" hubs for false claims of election fraud. (#riggedelection, for example, was garnering well over 1 million plays a day.) And no platform appears to have taken effective action against low-hanging fruit such as easily identifiable bots.

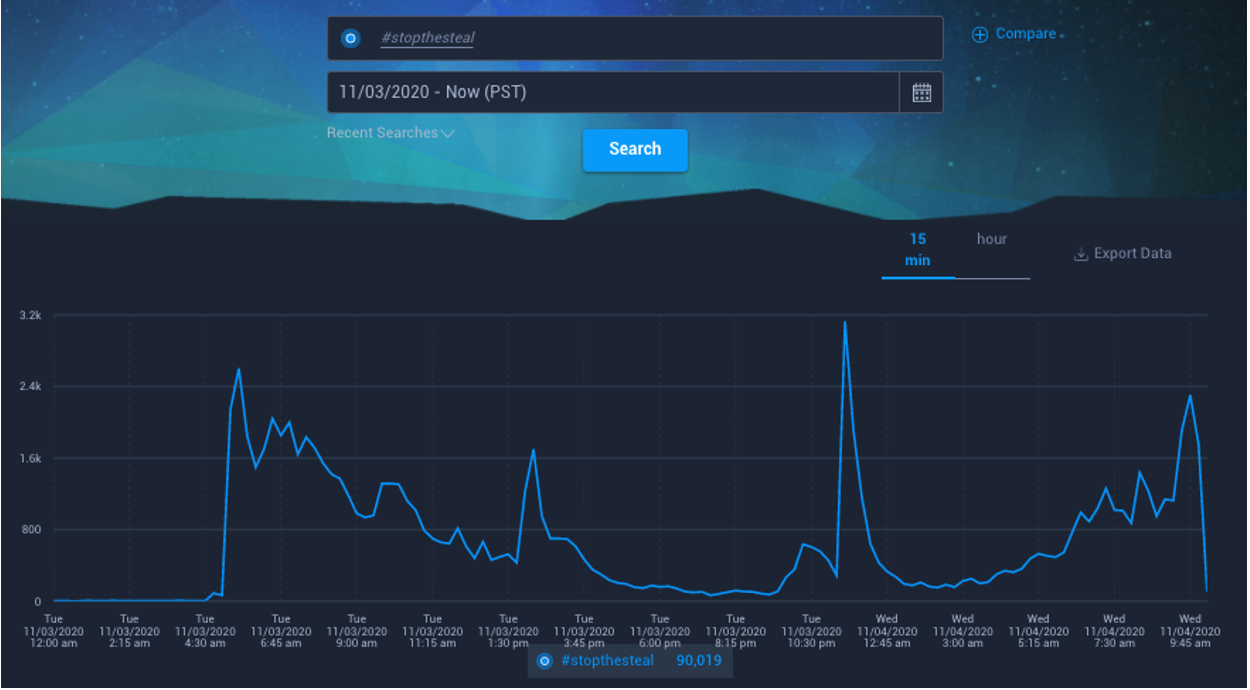

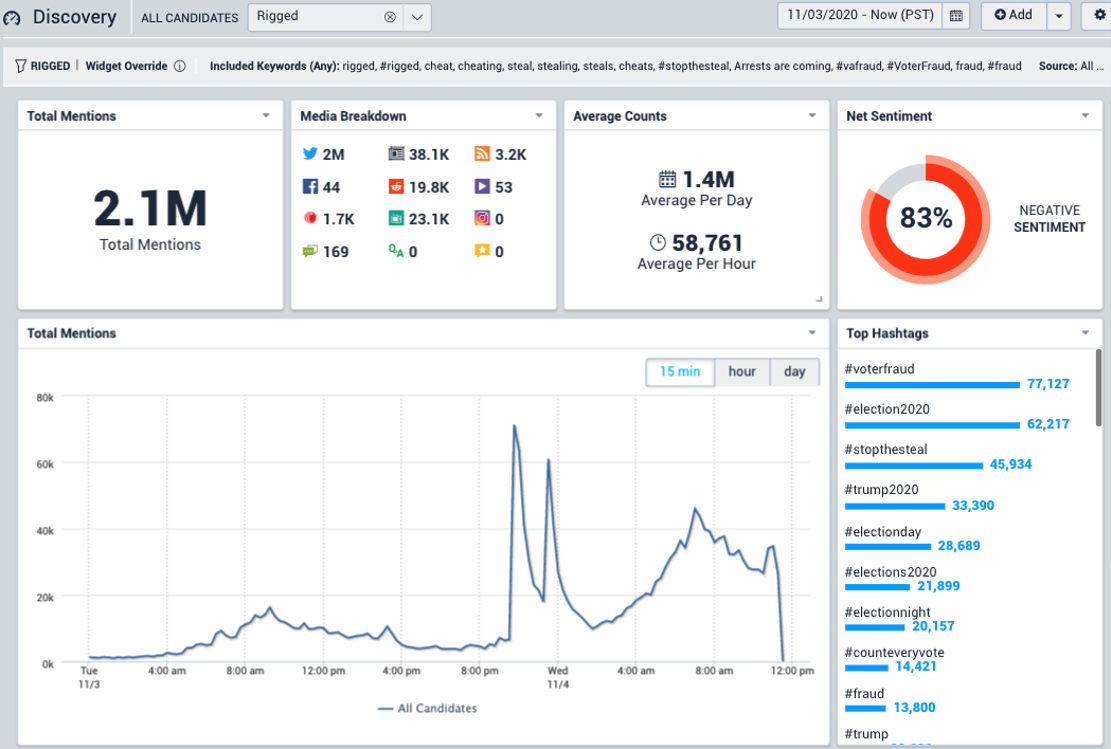

The result is that too much misinformation can still rapidly go viral, with too little effort. On Nov. 3, for example, the #stopthesteal false narrative took literally minutes to spike and then spread.

#StopTheSteal mentions over 24 hours starting 10 a.m. PST on Nov. 3. The late-Tuesday spike was launched by a viral tweet from a verified user. Twitter marked the tweet as misleading.

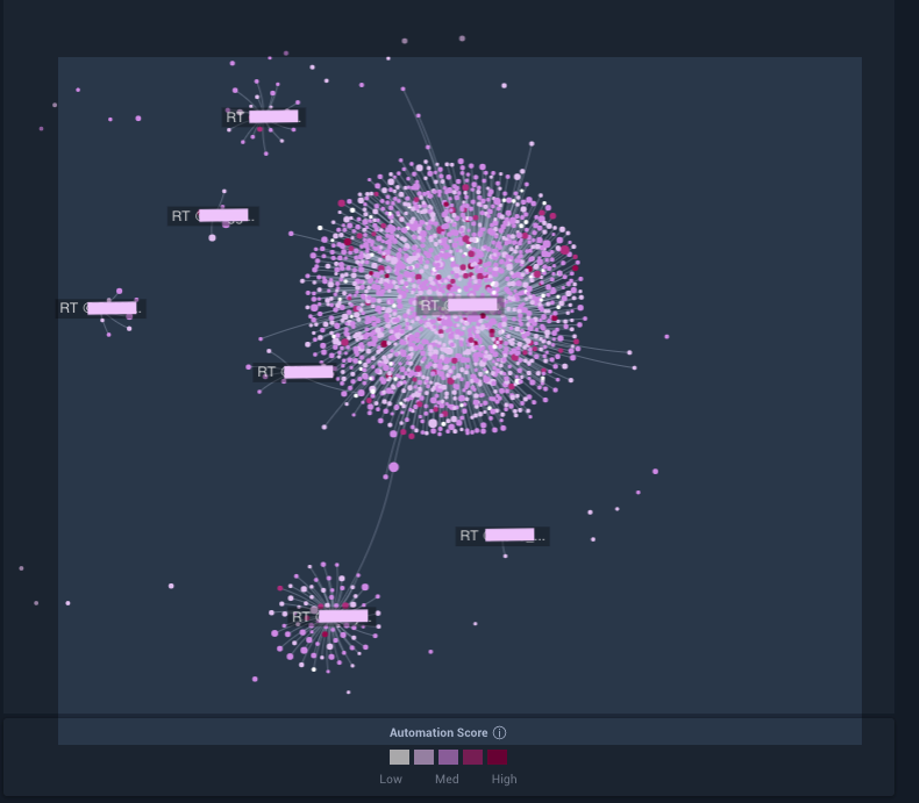

The network structure of the Nov. 3 evening spike indicates that its propagation was largely due to a single account. The large cluster shows retweets of the original tweet.

The data thus also reflects a horror-movie trope: "The killer is inside the house." In 2016, Russia drove U.S. media narratives through hack-and-dump operations, and then shaped online discussion via thousands of bots and trolls. But 2020 election-related misinformation was mostly a domestic affair. Iran was accused of mounting a campaign in Florida, and Russia was documented to have amplified QAnon, but their effect on the overall election appeared modest.

This may be partly due to the social media companies' own efforts, and partly due to the U.S. intelligence community, which undertook a variety of operations to hinder or deter foreign interference. Yet, as Gen. Paul Nakasone, the head of the NSA and U.S. Cyber Command, reminded us on Tuesday, we are not out of the woods yet. Most experts agree, and believe that nations like Russia are far from deterred. Rather, they may simply be keeping their powder dry while American misinformation purveyors do their work for them, far better than they could do it from afar.

This leads to the next key finding: The combination of polarized politics and siloed media ecosystems has created dueling information bubbles in America. It is not just that half the country believes democracy is at risk while the other half believes their lives have improved amid a pandemic, but that these bubbles have proven to be readily manipulable. Combined with a dated electoral system, this is a recipe for continued fights and dysfunction in our democracy. To put it in public-health terms, what the Kremlin injected in 2016 has spread into an infection that has compromised the immunity of our body politic to any and all information attacks.

Mentions of “Rigged” or “Stolen” election on Nov. 3. The first spike represents misinformation themes that emphasize that the Democrats are trying to steal the election. The second spike came from the #stopthesteal hashtag.

This is where much of the concern over the coming days, weeks, and even years should be. The data shows a growing trend over time in conversation around a "rigged" or "stolen" election. For instance, on Wednesday, a "Stop The Steal" group on Facebook set up by The Liberty Lab, a firm "that offers digital services to various conservative clients," was promoting a wide range of baseless election fraud theories. Despite the claims being false, the group was adding about 100 members a second. [Update: Facebook reportedly shut the group down on Thursday, after it gained 300,000 members in two days.] Given the increasing volume of this theme, we can expect online efforts to provoke protracted post-election instability.

The misinformation data points to a need for continued policy adjustment by the platform companies and vigilance by those in government, especially as this activity tips toward urging physical protest and violence. The platform companies must finally come to grips with something they have long avoided: how to handle prominent "superspreader" accounts that they know violate their rules but against which they hesitate to act for fear of backlash. As Alex Stamos, formerly Facebook's chief security officer, put it, "You have the same accounts violating the rules over and over again that don't get punished."

It also points to the desperate need for the U.S. to improve its long-term resilience to disinformation. This goes beyond changes in software and legal code. Certainly, the platforms must recalibrate the recommendation algorithms that continue to drive viral misinformation. So too, the government should update how it oversees and even regulates the industry and its role in election media. But there is also a need to better equip the targets of such campaigns: us. Earlier this year, Carnegie looked at 85 policy reports from 51 organizations that examined what to do about the problem of online misinformation. By far the most frequently recommended action item across all the groups (53%) was to raise U.S. digital literacy. Unlike nations such as Estonia or Ukraine, which experienced online threats and then built up resilience to them, the U.S. still has no true national effort on the problem. Our school systems and citizens are mostly on their own to learn how to discern truth from falsehood online. Both government and the nonprofit world must move forward on building the new cyber citizenship skills that are now needed.

This is hardly just an issue for kids. Research shows Baby Boomers spread misinformation at seven times the rate of those under 30. And too many in the media still, intentionally or unintentionally, help spread disinformation, elevating and enabling it. Too many ignore the lessons learned and best practices developed in recent years, while analytic tools and data-investigation training (such as those provided by groups like First Draft News) remain insufficiently deployed. Part of this is due to the partisan evolution of the media business. And part is due to a continued division in journalism between reporters who cover political campaigns and those on the cybersecurity-and-disinformation beat, even though the two beats have now clearly merged.

There is still much to be learned about an election season that is still very much ongoing, but the overall data on misinformation is a stark reminder that the problem of hacking social media remains with us. It is not just a constantly mutating threat to authentic discourse and informed debate in our politics, but an "infodemic" that makes battling public health threats even more difficult. The same tactics and trends we saw targeting voters are already being readied to target vaccines.

The battle for the presidency may soon be done, but the online war will continue.